How to deal with API rate limits

When I first had the idea for this post, I wanted to provide a collection of actionable ways to handle errors caused by API rate limits in your applications. But as it turns out, it's not that straightforward (is it ever?). API rate limiting is a minefield, and at the time of writing, there is no published standard for how to build and consume APIs that implement rate limiting. While I will provide some code solutions in the second half of this post, I want to start by discussing why we need rate limits and highlighting some of the inconsistencies you might find when dealing with rate-limited APIs.

Why do rate limits exist?

From the late 1990s to the early 2000s, the use of Software-as-a-Service (SaaS) tools was not mainstream. Authentication, content management, image storage, and optimization were painstakingly hand-crafted in-house. Frontend and backend weren’t separate entities or disciplines. The use of APIs as a middle layer between frontend and backend wasn’t common; database calls were made directly from page templates.

When the need (and desire) for separation between frontend and backend emerged, so did APIs as a middle layer. But these APIs were also built, scaled, and managed in-house while being hosted on physical servers on business premises. Development teams decided how to rate limit their own APIs, if they needed to at all. Fast forward to the mid-2010s and the SaaS ecosystem is packed full of headless, serverless, cloud-based tools for anything and everything.

And with SaaS APIs publicly available to everyone, rate limiting was introduced as a traffic management strategy on top of other low-level DDoS mitigation measures. It exists to maintain the stability of APIs and to prevent users (good or bad actors) from exhausting available system resources. Rate limiting is also part of many SaaS pricing models: pay a higher subscription fee and receive more generous limits.

So, how do we deal with being rate-limited by the APIs we consume?

HTTP response status codes are varied and inconsistent

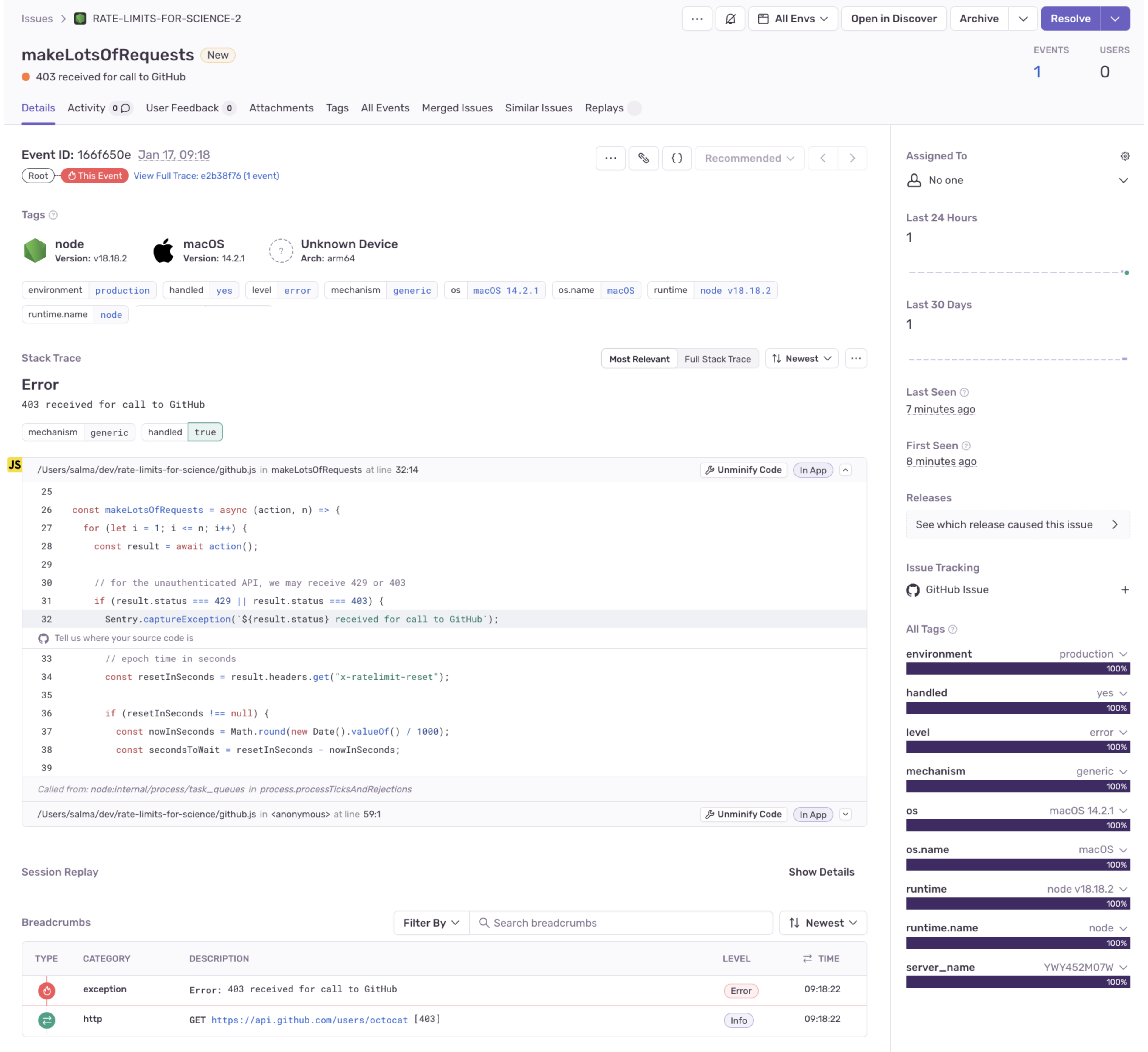

The standard HTTP status code for a rate-limited response is 429 Too Many Requests. However, because a rate limit can also be framed as a question of whether you are authorized to make a given number of requests (perhaps your API pricing plan has rate-limited you), you may instead receive an HTTP 403 Forbidden. I experienced this while testing the GitHub API as an unauthenticated user.

Whilst this is a valid use of HTTP 403, it suggests that, technically, status codes other than 429 could validly be returned in a rate-limited response. Even 418 I'm a teapot could be considered valid, given that "some websites use this response for requests they do not wish to handle." In short, when you're working with rate-limited APIs and want to evaluate the HTTP response status in code, you may need to do some testing to work out the full range of status codes you might receive in different cases. The same goes for HTTP response headers.
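One way to cope with this ambiguity is to treat a 403 as a rate limit only when the headers back it up. The sketch below assumes, as with the GitHub API, that the server sends an x-ratelimit-remaining header; isRateLimited() is a hypothetical helper, not part of any library, and any other 403 is treated as a genuine authorization error.

```javascript
// Decide whether a fetch() Response looks like a rate-limited response.
// Assumption: the API reports remaining requests in x-ratelimit-remaining
// (GitHub does); a 403 without that header at 0 is treated as a real 403.
function isRateLimited(response) {
  if (response.status === 429) {
    return true;
  }
  if (response.status === 403) {
    const remaining = response.headers.get("x-ratelimit-remaining");
    return remaining !== null && Number(remaining) === 0;
  }
  return false;
}
```

Checking the header keeps a genuine "you don't have access to this resource" 403 from being retried as though it were a temporary limit.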

HTTP response headers could be any combination of key-value pairs

When consuming an API that implements rate limiting, you should receive a set of response headers on each request (whether the request was allowed or denied) with more information about the rate limit, such as how many requests you have remaining in the current time window and when that number resets to the maximum.

Across the APIs I consumed and tested for this post, I found what a draft proposal from the Internet Engineering Task Force (IETF) describes: "there is no standard way for servers to communicate quotas so that clients can throttle its requests to prevent errors." For example:

| API | Header key | Header value |
|---|---|---|
| GitHub | X-RateLimit-Reset | epoch timestamp of expiration |
| Sentry | X-Sentry-Rate-Limit-Reset | epoch timestamp of expiration |
| OpenAI | X-RateLimit-Reset-Requests | time period in minutes and seconds after which the limit is reset (e.g. 1m6s) |
| Discord | X-RateLimit-Reset | epoch timestamp of expiration |
| Discord | X-RateLimit-Reset-After | time duration in seconds |
| Discord | Retry-After | time duration in seconds if the limit has been exceeded |

With this many differences across just four APIs, and in the absence of a standard, rate limit response headers could theoretically be any combination of key-value pairs.
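Because every API in the table names and formats its reset information differently, it can help to normalize it in one place. The following is a sketch, not a complete implementation: secondsUntilReset() and parseDuration() are hypothetical helpers, and the order in which headers are checked is my own assumption rather than anything these APIs specify. Retry-After is handled both as a number of seconds and as an HTTP date, since the HTTP specification allows either.

```javascript
// Parse OpenAI-style durations such as "1m6s", "6m0s", or "20ms" into seconds.
// Returns null if the string contains no recognizable duration.
function parseDuration(value) {
  const unitToMs = { ms: 1, s: 1000, m: 60_000, h: 3_600_000 };
  let totalMs = 0;
  let matched = false;
  // "ms" must be tried before "m" so "20ms" isn't read as 20 minutes
  for (const [, amount, unit] of value.matchAll(/(\d+(?:\.\d+)?)(ms|h|m|s)/g)) {
    totalMs += Number(amount) * unitToMs[unit];
    matched = true;
  }
  return matched ? totalMs / 1000 : null;
}

// Normalize the reset headers from the table into "seconds until reset".
// Assumption: relative durations are preferred over epoch timestamps.
function secondsUntilReset(headers, nowMs = Date.now()) {
  // Retry-After: delay in seconds, or an HTTP date
  const retryAfter = headers.get("retry-after");
  if (retryAfter !== null) {
    const seconds = Number(retryAfter);
    if (!Number.isNaN(seconds)) return seconds;
    const dateMs = Date.parse(retryAfter);
    if (!Number.isNaN(dateMs)) return Math.max(0, (dateMs - nowMs) / 1000);
  }

  // Discord: relative duration in seconds
  const resetAfter = headers.get("x-ratelimit-reset-after");
  if (resetAfter !== null) return Number(resetAfter);

  // OpenAI: duration string such as "1m6s"
  const openAiReset = headers.get("x-ratelimit-reset-requests");
  if (openAiReset !== null) {
    const seconds = parseDuration(openAiReset);
    if (seconds !== null) return seconds;
  }

  // GitHub, Discord, Sentry: epoch timestamp in seconds
  const epoch =
    headers.get("x-ratelimit-reset") ?? headers.get("x-sentry-rate-limit-reset");
  if (epoch !== null) return Math.max(0, Number(epoch) - nowMs / 1000);

  return null; // no recognizable rate limit headers
}
```

Passing nowMs explicitly makes the helper easy to test; in application code you would call secondsUntilReset(response.headers) and let it default to the current time.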

What to do when you’re being rate-limited

When using APIs that implement rate limits, you can use a combination of the HTTP status code and HTTP response headers to decide how to handle responses and avoid unexpected errors. But should you always retry an API call? As usual, it depends, but it can be useful to consider:

1. Rate limit thresholds: Can you retry after one second, or do you need to wait for 60 minutes?

2. Request urgency: Does one request need to complete successfully before another can be made? If so, see point 1.

3. Application type: Can you give the user feedback, asking them to try again in N minutes, rather than showing a seemingly infinite loading spinner whilst the request is retried in the background?
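If it helps to make these considerations explicit, they can be folded into a small decision function. This is a hypothetical sketch: chooseRateLimitAction(), the five-second default threshold, and the action names are illustrative assumptions, and secondsToWait would come from whichever rate limit header your API provides.

```javascript
// Turn the considerations above into a decision about a rate-limited request.
// maxAutoRetrySeconds and the action names are illustrative assumptions.
function chooseRateLimitAction(
  secondsToWait,
  { interactive = true, maxAutoRetrySeconds = 5 } = {},
) {
  // Point 1: a short wait is cheap enough to retry automatically
  if (secondsToWait <= maxAutoRetrySeconds) {
    return { action: "retry", delayMs: secondsToWait * 1000 };
  }
  // Point 3: a user is waiting, so tell them when to try again
  if (interactive) {
    const minutes = Math.ceil(secondsToWait / 60);
    return { action: "notify", message: `Please try again in ${minutes} minute(s).` };
  }
  // Background work: schedule this request (and anything that depends on it,
  // per point 2) for when the limit resets
  return { action: "schedule", delayMs: secondsToWait * 1000 };
}
```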

Use HTTP 429 with available response headers

Say (very hypothetically) you want to request information for 100 GitHub users on the client, and you don't have access to a server to hide your authentication credentials. When using the GitHub API unauthenticated, you are limited to 60 requests per hour. In this scenario, you'd hit the rate limit after the first 60 requests and not be able to recover for up to 60 minutes. Realistically, you wouldn't do something like this in a large-scale production app, but here's how I decided to deal with this case of rate limiting in JavaScript.

If we receive an HTTP status code of 429 or 403, we grab the epoch time value from the x-ratelimit-reset header and work out how long we need to wait. If that is less than five seconds, we wait that long and retry the same request (and to be honest, five seconds is probably still too long). Otherwise, we provide feedback about when to try again manually and stop.

```javascript
// Fetch a single (arbitrary) user from the GitHub API
async function getArbitraryUser() {
  const response = await fetch("https://api.github.com/users/octocat");
  return response;
}

// Thanks https://flaviocopes.com/await-loop-javascript/
const wait = (ms) => {
  return new Promise((resolve) => {
    setTimeout(() => resolve(), ms);
  });
};

const makeLotsOfRequests = async (action, n) => {
  let i = 1;
  while (i <= n) {
    const result = await action();

    // for the unauthenticated API, we may receive 429 or 403
    if (result.status === 429 || result.status === 403) {
      // epoch time in seconds
      const resetHeader = result.headers.get("x-ratelimit-reset");

      if (resetHeader === null) {
        // no reset information, so we can't tell how long to wait
        console.error(`HTTP ${result.status}: Sorry, try again later.`);
        break;
      }

      const nowInSeconds = Math.round(Date.now() / 1000);
      const secondsToWait = Math.max(0, Number(resetHeader) - nowInSeconds);

      // Retry only if we need to wait fewer than 5 seconds
      // we *could* be waiting for up to 60 minutes for the limit to reset
      if (secondsToWait < 5) {
        await wait(secondsToWait * 1000);
        continue; // retry the same request without advancing i
      }

      // provide useful feedback to user
      console.error(
        `HTTP ${result.status}: Sorry, try again later in ${Math.round(
          secondsToWait / 60,
        )} mins.`,
      );
      break;
    }

    i++;
  }
};

// Top-level await requires an ES module (or wrap this call in an async function)
await makeLotsOfRequests(getArbitraryUser, 100);
```

Retry the request with exponential backoff using HTTP response statuses

The approach in the example above, checking the response headers and waiting for the rate limit to reset, doesn't hold up well at scale. If N clients (from the same IP address) all wait for the limit to expire and then retry at the same moment, they'll hit the limit again immediately. This is known as the thundering herd problem.
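A common mitigation for the thundering herd problem is to add randomness ("jitter") to each client's wait, so clients that were rate-limited at the same moment spread their retries out. The sketch below uses a technique often called "full jitter": each attempt picks a random delay between 0 and an exponentially growing ceiling. backoffWithJitter() and fetchWithJitteredBackoff() are hypothetical helpers, and the baseMs and capMs defaults are illustrative, not values from any API.

```javascript
// Full jitter: pick a random delay in [0, min(capMs, baseMs * 2^attempt)) ms.
// random is injectable so the function can be tested deterministically.
function backoffWithJitter(
  attempt,
  { baseMs = 100, capMs = 10_000, random = Math.random } = {},
) {
  const ceiling = Math.min(capMs, baseMs * 2 ** attempt);
  return Math.floor(random() * ceiling);
}

function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Call fetchFn until it returns something other than 429, or until
// maxAttempts is reached, sleeping a jittered delay between attempts.
async function fetchWithJitteredBackoff(fetchFn, maxAttempts = 3) {
  let response;
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    if (attempt > 0) {
      await sleep(backoffWithJitter(attempt));
    }
    response = await fetchFn();
    if (response.status !== 429) {
      break;
    }
  }
  return response; // may still be a 429 if every attempt was rate-limited
}
```

The exponential ceiling still backs off under sustained pressure, while the randomness means two clients rarely pick the same delay.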

Instead of relying on rate limit headers, you can retry the request anyway, increasing the wait time exponentially before each subsequent attempt. Here's a code example in JavaScript. In the doRetriesWithBackOff() function, we keep retrying the request while retries is less than MAX_RETRIES (in this case three) and retry hasn't been set to false by a successful response. Before each attempt, we use the retries variable to calculate a higher waitInMilliseconds value: (2^retries - 1) * 100. Because retries is 0 when the loop begins, the first wait is 0 ms; after each 429, the wait grows to 100 ms and then 300 ms, so at most three requests are made in total.

This example only handles receiving an HTTP 200 or 429. You could specify different behaviors for other HTTP status codes you expect, or you could send an error to Sentry to monitor the different types of responses your application receives and decide whether each case is worth handling in code. As with the example above, this is a somewhat arbitrary example that probably doesn't cover all bases, but it's intended to give you a starting point if you're looking for this type of rate limit handling.

```javascript
// Assumes the Sentry SDK is installed and Sentry.init() has been called;
// use "@sentry/node" instead when running on a server
import * as Sentry from "@sentry/browser";

const MAX_RETRIES = 3;

function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

async function doRetriesWithBackOff() {
  let retries = 0;
  let retry = true;

  do {
    // (2^retries - 1) * 100 gives waits of 0 ms, 100 ms, 300 ms, ...
    const waitInMilliseconds = (Math.pow(2, retries) - 1) * 100;
    await sleep(waitInMilliseconds);

    const response = await fetch("https://api-url.io/get-me-something");

    switch (response.status) {
      case 200: // Ok, successful
        retry = false;
        console.log("successful");
        break;

      case 429: // Too Many Requests
        console.log("retrying");
        retries++;
        retry = true;
        break;

      default: // Something unexpected happened, stop retrying
        console.log("stopping");
        retry = false;
        // You could send an error message to Sentry here
        Sentry.captureException(
          new Error(
            `${response.status} received for API call to https://api-url.io/get-me-something`,
          ),
        );
        break;
    }
  } while (retry && retries < MAX_RETRIES);

  if (retry) {
    console.log(`giving up after ${retries} rate-limited attempts`);
  }
}

// Arbitrary loop to call the API 500 times for testing purposes
// (top-level await requires an ES module)
for (let i = 0; i < 500; i++) {
  await doRetriesWithBackOff();
}
```

Don't retry the rate-limited request

It might not always be appropriate to retry a rate-limited request (especially with very hard rate limits, such as those on the unauthenticated GitHub API). In this case, you could avoid the overhead of extra function execution time and return a friendly message to rate-limited users, including how long they need to wait before retrying manually.
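Here is a minimal sketch of this fail-fast approach, assuming a GitHub-style x-ratelimit-reset header containing an epoch timestamp in seconds. rateLimitMessage() and fetchOnce() are hypothetical helpers, and the message wording is only illustrative.

```javascript
// Build a user-facing message from an x-ratelimit-reset value (epoch seconds).
function rateLimitMessage(resetEpochSeconds, nowMs = Date.now()) {
  const secondsToWait = Math.max(0, Math.ceil(resetEpochSeconds - nowMs / 1000));
  if (secondsToWait < 60) {
    return `You've hit the rate limit. Please try again in ${secondsToWait} second(s).`;
  }
  const minutes = Math.ceil(secondsToWait / 60);
  return `You've hit the rate limit. Please try again in ${minutes} minute(s).`;
}

// Make a single request and never retry: on a rate-limited response,
// return a friendly message instead of waiting.
async function fetchOnce(url) {
  const response = await fetch(url);
  const reset = response.headers.get("x-ratelimit-reset");
  if ((response.status === 429 || response.status === 403) && reset !== null) {
    return { ok: false, message: rateLimitMessage(Number(reset)) };
  }
  return { ok: response.ok, response };
}
```

In a UI, you would render result.message from fetchOnce() instead of showing a loading spinner.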

Being rate-limited is one of those “nice to have” problems

If your third-party API tools are rate-limiting your application, it means you have users. And depending on your pricing tier, it could mean you have lots of users. Congratulations! Now go and upgrade your SaaS tool plans.

Originally posted on blog.sentry.io